Jeff's Notebook: Optimally tune an algorithm, read Superscalar Programming 101 (Matrix Multiply) !!

This summer, one of Intel's Black Belt Software Developers, Jim Dempsey, published a multi-part article on the Parallel Programming Community to help developers sharpen their parallel programming skills: Superscalar Programming 101 (Matrix Multiply). Jim discusses how to optimally tune a well-known algorithm. He first shows the common method for parallelizing it, then outlines several alternative approaches; the final one is a fully cache-sensitized parallelization. The article is very detailed and includes some great code examples that I think you will find very useful. It has received good reviews from developers who have read it, and I recommend reading all five parts.

- Superscalar Programming 101 (Matrix Multiply) Part 1 of 5

- Superscalar programming 101 (Matrix Multiply) Part 2 of 5

- Superscalar programming 101 (Matrix Multiply) Part 3 of 5

- Superscalar programming 101 (Matrix Multiply) Part 4 of 5

- Superscalar programming 101 (Matrix Multiply) Part 5 of 5
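To give a feel for what "cache-sensitized" means before you dive into the series, here is a minimal sketch of a textbook matrix multiply next to a tiled (blocked) variant. This is not Jim's code: the function names and the `tile` parameter are my own assumptions, and plain Python will not show the cache benefit. It only illustrates the loop structure.

```python
def matmul_naive(a, b):
    """Textbook triple loop: c[i][j] = sum over k of a[i][k] * b[k][j]."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            total = 0.0
            for k in range(m):
                total += a[i][k] * b[k][j]
            c[i][j] = total
    return c


def matmul_tiled(a, b, tile=2):
    """Same result, computed tile by tile so that a small block of a, b
    and c is reused while it is still likely to be in cache.
    `tile` is a hypothetical tuning knob, not a value from the article."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i0 in range(0, n, tile):
        for k0 in range(0, m, tile):
            for j0 in range(0, p, tile):
                # Work on one tile; min() handles ragged edges.
                for i in range(i0, min(i0 + tile, n)):
                    for k in range(k0, min(k0 + tile, m)):
                        aik = a[i][k]
                        for j in range(j0, min(j0 + tile, p)):
                            c[i][j] += aik * b[k][j]
    return c
```

Both functions return the same product; in a compiled language the tiled version is the usual starting point for the kind of cache tuning the series describes.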

Intel® Performance Counter Monitor - A better way to measure CPU utilization

The complexity of computing systems has tremendously increased over the last decades. Hierarchical cache subsystems, non-uniform memory, simultaneous multithreading and out-of-order execution have a huge impact on the performance and compute capacity of modern processors.

“CPU Utilization” measures only the time a thread is scheduled on a core

Software that understands and dynamically adjusts to the resource utilization of modern processors has performance and power advantages. The Intel® Performance Counter Monitor provides sample C++ routines and utilities for estimating the internal resource utilization of the latest Intel® Xeon® and Core™ processors, which can help you gain a significant performance boost.

When the CPU utilization does not tell you the utilization of the CPU

The CPU utilization number obtained from the operating system (OS) is a metric that has been used for many purposes, such as product sizing, compute capacity planning, and job scheduling. The current implementation of this metric (the number that the UNIX* “top” utility and the Windows* Task Manager report) shows the portion of time slots that the OS scheduler could assign to running programs or to the OS itself; the rest of the time is idle. For compute-bound workloads, the CPU utilization calculated this way predicted the remaining CPU capacity very well on architectures of the 1980s, which had much more uniform and predictable performance than modern systems. Advances in computer architecture have made it an unreliable metric: multi-core and multi-CPU systems, multi-level caches, non-uniform memory, simultaneous multithreading (SMT), pipelining, out-of-order execution, and so on.
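The classic metric described above can be sketched in a few lines. This is a simplified illustration, assuming the OS exposes cumulative busy and idle tick counters; the `(busy_ticks, idle_ticks)` snapshot format and the function name are hypothetical, not an actual OS or PCM interface.

```python
def cpu_utilization(before, after):
    """Share of scheduler time that was not idle between two snapshots.

    Each snapshot is a hypothetical (busy_ticks, idle_ticks) pair of
    cumulative counters, as an OS might keep per CPU.
    """
    busy = after[0] - before[0]
    idle = after[1] - before[1]
    total = busy + idle
    if total <= 0:
        raise ValueError("snapshots must span a positive time interval")
    return busy / total
```

Note that nothing in this calculation looks inside the core: a thread stalled on memory counts as "busy" exactly like one retiring instructions every cycle.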

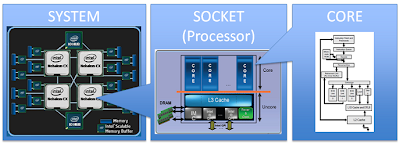

The complexity of a modern multi-processor, multi-core system

A prominent example is the non-linear CPU utilization on processors with Intel® Hyper-Threading Technology (Intel® HT Technology). Intel® HT Technology is a great performance feature that can boost performance by up to 30%. However, end users who are unaware of HT are easily confused by the reported CPU utilization. Consider an application that runs a single thread on each physical core: the reported CPU utilization is 50%, even though the application can use 70%-100% of the execution units. Details are explained in [1].
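The arithmetic behind this example can be sketched as follows. It is a rough model under stated assumptions, not a measurement: two hardware threads per core, and a hypothetical `ht_speedup` factor (1.0 to 1.3, from the "up to 30%" figure) describing how much more work a core does with both threads busy than with one.

```python
def reported_utilization(busy_logical, cores, threads_per_core=2):
    """What the OS reports: busy logical CPUs over all logical CPUs."""
    return busy_logical / (cores * threads_per_core)


def throughput_share(ht_speedup):
    """Share of a core's peak throughput already reached with one thread,
    assuming two threads together would reach ht_speedup times that
    single-thread throughput (hypothetical model parameter)."""
    if ht_speedup < 1.0:
        raise ValueError("ht_speedup is assumed to be at least 1.0")
    return 1.0 / ht_speedup


# Four physical cores, one busy thread on each.
reported = reported_utilization(busy_logical=4, cores=4)
best_case_ht = throughput_share(1.3)   # HT would add the full 30%
no_gain_ht = throughput_share(1.0)     # HT would add nothing
print(f"reported: {reported:.0%}, real: {best_case_ht:.0%} to {no_gain_ht:.0%}")
```

Under this model the OS reports 50% while the cores are already delivering somewhere between roughly three quarters and all of their achievable throughput, which is in line with the range quoted above.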

Another example is the CPU utilization for memory-throughput-intensive workloads on multi-core systems. The bandwidth test “stream” saturates the capacity of the memory controller with fewer threads than there are cores available.
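A toy model makes the effect easy to see. The numbers below (8 cores, 10 GB/s per thread, a 30 GB/s memory controller) are invented for illustration only, and the function names are hypothetical; real systems do not scale this cleanly.

```python
import math


def stream_throughput(threads, per_thread_gbs, controller_gbs):
    """Aggregate bandwidth: scales with threads until the memory
    controller limit caps it."""
    return min(threads * per_thread_gbs, controller_gbs)


def threads_to_saturate(per_thread_gbs, controller_gbs):
    """Smallest thread count that reaches the controller limit."""
    return math.ceil(controller_gbs / per_thread_gbs)


CORES = 8            # hypothetical machine
PER_THREAD = 10      # GB/s one thread can pull (assumed)
CONTROLLER = 30      # GB/s the memory controller can deliver (assumed)

for threads in range(1, CORES + 1):
    bw = stream_throughput(threads, PER_THREAD, CONTROLLER)
    util = threads / CORES
    print(f"{threads} threads: CPU utilization {util:.0%}, bandwidth {bw} GB/s")
```

In this model the bandwidth stops growing at three threads, yet the reported CPU utilization keeps climbing to 100% as more threads are added. The "free" capacity the OS reports at three threads cannot be used by a memory-bound workload.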
